Every morning, the traffic getting out of my neighbourhood is a nightmare. I have no patience for sitting in traffic jams while I’m in a hurry to get my kids to school and get to work.

So several years ago, I started to experiment with alternative routes.

A back route to the main road saved me a good 10 minutes, until, several months later, it suddenly became as clogged as my previous route. Several other alleged shortcuts later, I believe I’ve finally hit on the optimum route, although I’ll probably have to adjust that, too, eventually!

Now, I’m not boring you with details of my morning commute for no reason. It happens to be the perfect analogy for a good digital marketing campaign.

All too often, companies launch a digital initiative (an advertising campaign, a regular email, a social media page) and then just let it run, leaving the same ads live indefinitely or posting the same content over and over.

The problem is that your first attempt is rarely the best. It’s like sitting in traffic, day in, day out, without ever investigating the alternative routes.

It can take time to hit on the perfect formula and find the right way to engage your audience.

Just like on the road, there are lots of competitors trying to get to the same place. You have to be agile to beat them.

And things that worked for you six months ago may not work as well today. Ads grow stale as your audience gets used to seeing them, audience interests shift, and the platforms you’re using change too.

Probably the biggest mistake I see marketers make is failing to optimise their activities. They decide a campaign has ‘failed’ when, in reality, it was simply never refined. Or they settle for a campaign that performs just fine, when a bit of tweaking could have made it amazing.

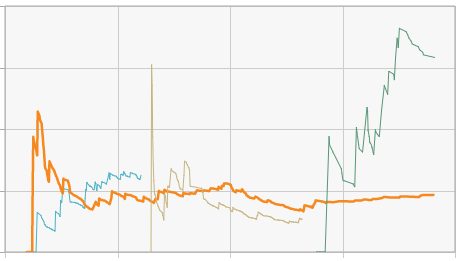

Here’s an example, from one of our own advertising campaigns, of why testing is a must:

Each line in the chart represents a different version of the same landing page. Every week or so, we take the best-performing version and test a variation of it to see whether it does any better.

As you can see, for a while all the versions performed about the same. But last week, we found a version that converted at a dramatically higher rate than the others. (The difference was the number of form fields that subscribers had to fill in.)

Next week, the challenge will be to take all the lessons we’ve learned and see whether we can improve on the ‘green’ landing page too.

In the meantime, we’re getting more bang for our buck: twice as many new subscribers as last week, whilst our cost per conversion has dropped.

When you think of it that way, how can you afford to sit in that traffic?